Floating point numbers are a crucial yet complex concept in programming interviews. Whether you’re an aspiring developer prepping for your first coding interview or a seasoned engineer looking to brush up, you need to thoroughly understand how floating point numbers work.

In this comprehensive guide, we’ll break down what floating point numbers are, common floating point number interview questions you may encounter, example responses, and expert tips to help you nail this tricky topic. By the end, you’ll feel fully prepared to discuss floating point numbers and implement them in code during your next interview.

What Are Floating Point Numbers?

Let’s start with the basics. Floating point numbers are a way to approximate real numbers in computing. They can cover the very wide range of magnitudes needed for scientific and engineering applications.

The decimal (or radix) point can “float” to different places among the number’s significant digits, which is where the term “floating point” comes from. This allows a large range of values to be represented compactly.

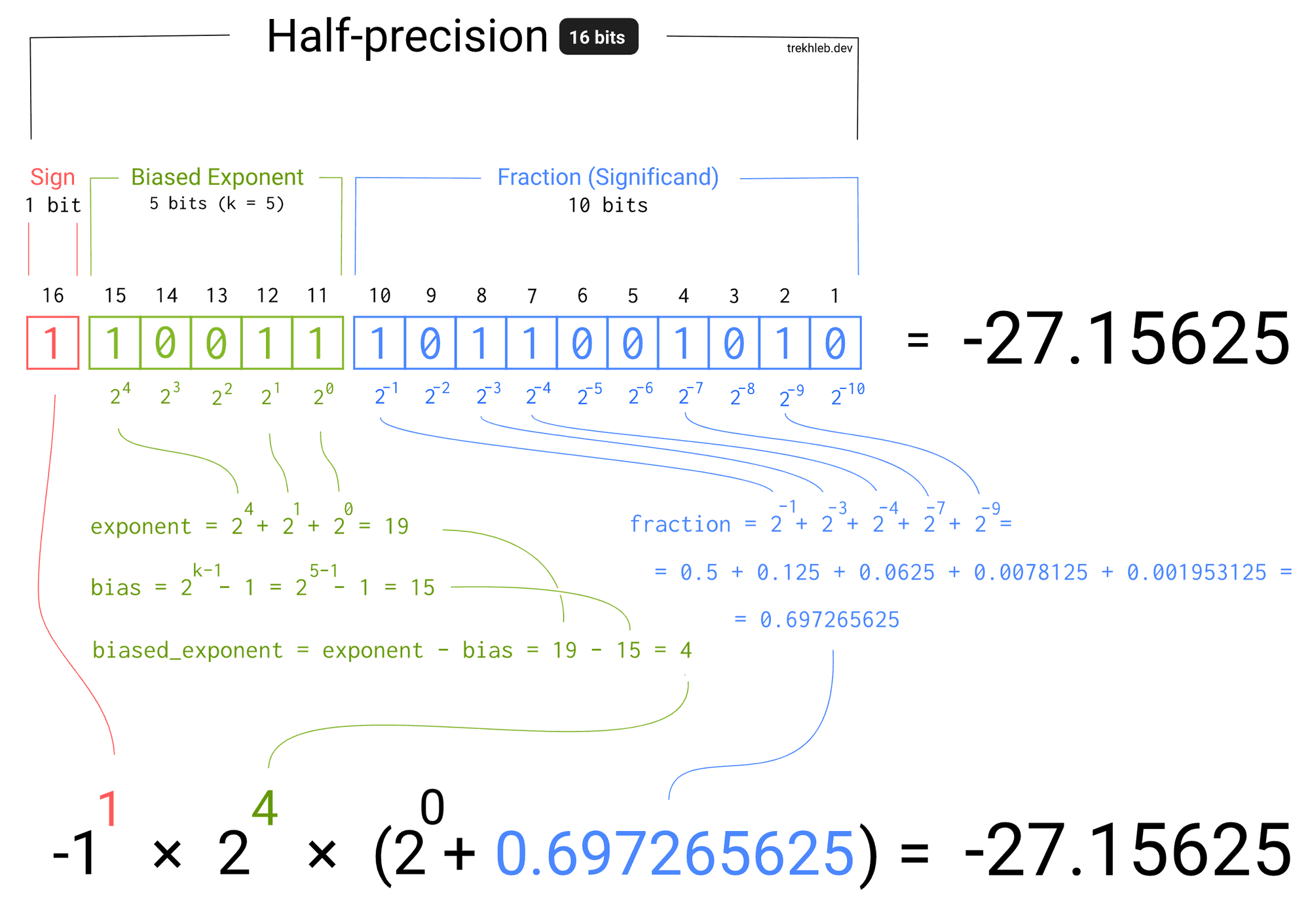

Under the hood, floating point numbers contain:

- A sign bit encoding whether the number is positive or negative

- An exponent encoding the power-of-two scale factor

- A mantissa (also called the significand) encoding the significant digits

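The mantissa is normalized, meaning it is shifted so that its leading digit is 1, and the exponent is adjusted to compensate. This lets a fixed number of bits represent a very wide range of values, which is what gives floating point numbers their flexibility.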
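To see the three fields in practice, Python's built-in `float.hex()` method prints a float (an IEEE 754 double in CPython on mainstream platforms) as a sign, a normalized hexadecimal mantissa with a leading 1, and a power-of-two exponent after `p`. A quick sketch:

```python
# float.hex() writes a double as [sign]0x1.<mantissa hex digits>p<exponent>:
# a normalized mantissa with a leading 1, scaled by a power of two.
for value in (6.5, -0.75, 0.1):
    print(f"{value!r:>6} -> {value.hex()}")

# 6.5   = +1.625 * 2**2   -> 0x1.a000000000000p+2
# -0.75 = -1.5   * 2**-1  -> -0x1.8000000000000p-1
# 0.1 has no finite binary expansion, so its mantissa is a rounded,
# repeating hex pattern   -> 0x1.999999999999ap-4

# The hex form is exact, so it round-trips without any loss.
assert float.fromhex((0.1).hex()) == 0.1
```

The sign is the leading `-`, the hex digits after `0x1.` are the stored mantissa bits, and the number after `p` is the unbiased binary exponent.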

Nearly all programming languages support floating point types, like float and double in C/C++, float and double in Java, or float in Python (which is a 64-bit double precision value under the hood). Understanding how they work is key to using them effectively and debugging weird behaviors.

Common Floating Point Interview Questions

Floating point numbers come up frequently in coding interviews. Here are some of the most common questions on this topic:

Precision Issues

- Explain why 0.1 + 0.2 == 0.3 evaluates to false in many languages.

- How can you check for equality between floating point numbers with a tolerance?

Representation

- How are floating point numbers represented in memory?

- How does normalization work for floating point numbers?

- What are the pros and cons of different floating point precisions, such as single vs. double?

Operations

- How does rounding work for floating point addition, subtraction, multiplication, and division?

- What causes errors like loss of precision in floating point calculations?

- How are comparisons like <, >, and == handled for floating point numbers?

Hardware

- How do GPUs and FPUs handle floating point operations?

- What is IEEE 754 and how does it standardize floating point arithmetic?

Thoroughly studying floating point number formats, precision, operations, and hardware handling ensures you can intelligently discuss this topic.

Sample Floating Point Interview Questions and Responses

Let’s walk through examples of common floating point interview questions along with strong responses:

Question: Explain why comparing two floating point numbers for equality using == often fails.

Sample Response: Equality checks between floating point numbers can fail because of rounding error. Floating point numbers are stored in binary, and many decimal fractions such as 0.1 have no finite binary expansion (just as 1/3 has no finite decimal expansion), so they are stored as the nearest representable value. Arithmetic on these approximations is rounded again, so two computations that are mathematically equal can produce slightly different results. For instance, with IEEE 754 doubles, 0.1 + 0.2 evaluates to 0.30000000000000004, which is a different value from the double nearest 0.3. The best practice is to check that two floating point numbers are close within a tolerance, usually relative to their magnitude, plus an absolute tolerance for values near zero.
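A minimal Python sketch of the failure and the tolerance-based fix. `math.isclose` is in the standard library; `approx_equal` is a hypothetical helper written out to show what a combined relative/absolute check does, and the tolerance values are illustrative assumptions rather than universal defaults.

```python
import math

a = 0.1 + 0.2
print(a)          # 0.30000000000000004
print(a == 0.3)   # False

def approx_equal(x: float, y: float, rel_tol: float = 1e-9, abs_tol: float = 0.0) -> bool:
    """Hypothetical helper: True if x and y differ by at most rel_tol times
    the larger magnitude, or by at most abs_tol in absolute terms."""
    return abs(x - y) <= max(rel_tol * max(abs(x), abs(y)), abs_tol)

print(approx_equal(a, 0.3))   # True
print(math.isclose(a, 0.3))   # True (default rel_tol=1e-09)

# A purely relative tolerance never accepts a nonzero value as "close" to 0.0,
# so comparisons against zero need an absolute tolerance as well.
tiny = 1e-17
print(math.isclose(tiny, 0.0))                 # False
print(math.isclose(tiny, 0.0, abs_tol=1e-12))  # True
```

The right tolerance depends on the magnitudes and the amount of accumulated error in the application, so it is worth stating your choice explicitly in an interview.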

Question: Walk me through how floating point numbers are represented in memory.

Sample Response: Floating point numbers contain three components encoded in bits: a sign bit denoting positive or negative, an exponent indicating a power-of-two scale factor, and a mantissa containing the significant digits. For example, in an IEEE 754 32-bit single precision float, 1 bit is the sign, 8 bits are the exponent (stored with a bias of 127), and 23 bits are the mantissa. For normalized numbers the mantissa's leading binary digit is always 1, so it is left implicit rather than stored, which yields 24 bits of precision from 23 stored bits. By adjusting the exponent, a wide range of values can be represented compactly in just 32 bits.
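The layout described above can be checked with a short sketch using Python's standard `struct` module, assuming IEEE 754 single precision. `decode_float32` and `reassemble` are hypothetical helper names, and `reassemble` deliberately handles only normal numbers (not zero, subnormals, infinity, or NaN).

```python
import struct

def decode_float32(x: float) -> tuple[int, int, int]:
    """Hypothetical helper: round x to single precision and return its raw
    (sign, biased exponent, stored mantissa) bit fields."""
    # Pack as a big-endian 32-bit float, then reinterpret the same 4 bytes
    # as an unsigned 32-bit integer.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                 # 1 bit
    exponent = (bits >> 23) & 0xFF    # 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF        # 23 bits; the leading 1 is implicit
    return sign, exponent, mantissa

def reassemble(sign: int, exponent: int, mantissa: int) -> float:
    """Hypothetical helper: rebuild a *normal* number (exponent not 0 or 255)."""
    return (-1) ** sign * (1 + mantissa / 2**23) * 2.0 ** (exponent - 127)

s, e, m = decode_float32(-6.5)
print(s, e, m)               # 1 129 5242880
print(reassemble(s, e, m))   # -6.5
```

Here -6.5 is -1.625 × 2², so the sign bit is 1, the stored exponent is 2 + 127 = 129, and the mantissa field holds the fractional part 0.625 scaled by 2²³.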

Question: What causes loss of precision issues in floating point calculations?

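Sample Response: Precision loss happens for a few reasons. First, converting between decimal and binary introduces small inaccuracies, because many short decimal fractions (like 0.1) have infinitely repeating binary expansions, while floats store only a fixed number of binary digits. Second, the exact result of an operation often needs more significant bits than the mantissa can hold, so it is rounded to the nearest representable value. This is especially visible when adding a small number to a much larger one, where the small value can be rounded away entirely, or when subtracting nearly equal numbers, where most significant digits cancel and the remaining digits are dominated by earlier rounding error. Results outside the exponent's range also overflow to infinity or underflow toward zero. Carrying out many sequential calculations compounds these errors, so floating point operations should be carefully ordered to minimize precision loss when possible.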
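Each mechanism can be reproduced in a few lines of Python, which uses IEEE 754 doubles with a 53-bit significand. A minimal sketch; `math.fsum` is the standard library's exactly rounded summation and is shown as one way to avoid accumulated error, not the only one. The loop is written out by hand because recent Python versions make the built-in `sum()` compensate for float rounding.

```python
import math

# 1. Representation error accumulates: each 0.1 is already rounded,
#    and every addition rounds again.
total = 0.0
for _ in range(10):
    total += 0.1
print(total)                    # 0.9999999999999999
print(math.fsum([0.1] * 10))    # 1.0 (fsum tracks exact partial sums)

# 2. Absorption: at 2**53 the gap between adjacent doubles is 2,
#    so adding 1.0 is rounded away completely.
big = 2.0 ** 53
print(big + 1.0 == big)         # True

# 3. Cancellation: subtracting nearly equal numbers exposes the rounding
#    error already stored in 1.0 + 1e-15.
diff = (1.0 + 1e-15) - 1.0
print(diff)                     # 1.1102230246251565e-15, not 1e-15
```

In the cancellation case the subtraction itself is exact; the roughly 11% relative error comes entirely from rounding 1.0 + 1e-15 to the nearest double beforehand.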

Question: How does the GPU handle floating point operations compared to the CPU?

Sample Response: GPUs are designed to parallelize floating point calculations, especially the vector and matrix math needed for graphics. They contain many simpler floating point units optimized for throughput rather than low latency. GPUs also commonly support lower precision formats like 16-bit floats, which trade accuracy for speed and memory bandwidth. CPUs have fewer, latency-optimized floating point units and typically handle 64-bit double precision efficiently, whereas many consumer GPUs execute double precision at only a small fraction of their single precision rate. Modern CPU instruction sets also include SIMD vector units (such as SSE and AVX on x86) that apply one floating point operation to several values at once. GPUs excel when massive parallelism is needed, while CPUs are better for general purpose and latency-sensitive floating point work.
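The accuracy side of this trade-off can be sketched on any machine with Python's standard `struct` module, whose `'e'`, `'f'`, and `'d'` format codes pack a value as an IEEE 754 half, single, or double precision float. `round_trip` is a hypothetical helper name, and the sketch shows only storage precision, not hardware speed.

```python
import struct

def round_trip(x: float, fmt: str) -> float:
    """Hypothetical helper: round x to the IEEE 754 format named by a
    struct code ('e' = 16-bit half, 'f' = 32-bit single, 'd' = 64-bit double)."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

for fmt, name in (("e", "half"), ("f", "single"), ("d", "double")):
    stored = round_trip(0.1, fmt)
    print(f"{name:>6}: {stored!r:<22} error = {abs(stored - 0.1):.1e}")
```

Half precision stores 0.1 as 0.0999755859375 and single precision as 0.10000000149011612, while the double round trip is lossless because Python floats are already doubles. This is the same single vs. double trade-off raised in the representation questions: fewer bits mean less memory and often more throughput, at the cost of fewer significant digits.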

The key is anticipating the types of technical questions you may get on floating point numbers and being able to clearly explain the concepts, procedures, and considerations involved.

Tips for Acing Floating Point Interview Questions

Here are some top tips for mastering floating point questions during interviews:

- Practice explaining concepts visually – Draw diagrams showing how floats are encoded in memory, normalization, rounding, etc. Visuals can aid understanding significantly.

- Use examples to illustrate points – Floating point precision failures, rounding behavior, comparisons, etc. can be demonstrated concretely with code snippets.

- Relate concepts back to real-world uses – Discuss how floats enable scientific computing, 3D graphics, simulations, etc. This shows the value beyond academic details.

- Admit the limitations – Floats have precision tradeoffs. Identifying these transparently is better than overstating capabilities.

- Clarify your assumptions – State if you are assuming 32 vs. 64-bit floats, a specific language’s behavior, etc.
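As an example of demonstrating rounding behavior and comparisons concretely, here is a short Python sketch. Note that the tie-breaking rule of `round()` is Python-specific (other languages' rounding functions may round ties away from zero), which is exactly the kind of assumption worth stating aloud.

```python
import math

# Python's round() breaks ties to the nearest even number ("banker's rounding").
print(round(0.5), round(1.5), round(2.5))    # 0 2 2

# 2.675 is stored as slightly less than 2.675, so it rounds down.
print(round(2.675, 2))                        # 2.67

# NaN compares unequal to everything, including itself.
nan = float("nan")
print(nan == nan, nan < 1.0, nan > 1.0)       # False False False
print(math.isnan(nan))                        # True

# IEEE 754 has a signed zero, but -0.0 and 0.0 compare equal.
print(-0.0 == 0.0, math.copysign(1.0, -0.0))  # True -1.0
```

Because NaN fails every ordered comparison, code that sorts or takes a maximum over floats should check for NaN explicitly with `math.isnan` rather than relying on `<` or `==`.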

With practice and these techniques, you can master floating point number questions in any coding interview. The key is understanding concepts thoroughly and relating them back to practical programming. Be prepared to get hands-on and write examples illustrating your points. This ability to discuss a complex topic clearly while grounding it in real code will impress interviewers and highlight your technical communication skills.

Put Your Floating Point Knowledge to Work

This guide only scratches the surface of the depth and complexity of floating point math. But preparing explanations for common questions, practicing your responses, and grounding discussions in concrete examples will help instill mastery of the core concepts.

Floating point numbers come up frequently across languages and problem domains. Mastering this tricky topic will serve you well throughout your programming career, starting with acing the floating point questions in your next coding interview.

